VMware vSphere Fault Tolerance – FT

VMware Fault Tolerance (FT) is a new HA solution from VMware for VMs. It is only available in vSphere 4 and above and provides a much more resilient failover solution than VMware HA. One of the nice things about FT is that a VM does not need to be restarted after a host failure. However, before you start using FT for important VMs such as Domain Controllers, here is what you need to know about Fault Tolerance:

- Similar to VMware HA, Fault Tolerance (FT) provides failover at the host level, so it fails over VMs only when a host in the cluster fails. It does not work across clusters or datacenters.

- FT uses vLockstep technology. vLockstep records the x86 instructions executed by the Primary VM and replays them on the Secondary VM. vLockstep also keeps track of the heartbeat between the Primary and Secondary VMs.

- While vCenter (VirtualCenter) is required to configure FT, it doesn’t have to be running during a failure event.

- You can have On-Demand Fault Tolerance for a VM, that is, turn FT on only for the periods when the VM needs the extra protection.

- FT makes sure that the Primary and Secondary VMs are never on the same host by using a host anti-affinity check.

- The following requirements must be met before FT can be configured:

Host must have certificate checking enabled

vmdks must be thick provisioned

All hosts must be from the same compatible processor group that supports FT

No Snapshots: A VM participating in FT cannot have any snapshots

No Storage VMotion: A VM participating in FT cannot use Storage VMotion

No DRS: A VM participating in FT cannot use DRS

No SMP: A VM in FT can only have single vCPU

No physical RDMs: A VM in FT cannot have physical-mode RDMs but it can have virtual-mode RDMs (vRDMs)

Hot-add/hot-plug is not compatible with VMware Fault Tolerance

- Upon a host failure, the surviving VM takes over and a new Secondary VM is automatically re-spawned

- VMware HA must be turned on in the cluster before FT can be enabled

- Host Monitoring in VMware HA is required for FT recovery process to work properly.

- FT Networking: You must have 2 different vSwitches, one for FT Logging and second for VMotion on different subnets or VLANs. It’s recommended to use 10Gb network for FT Logging traffic along with Jumbo Frames.

- FT Maximums: You should not have more than 4 FT VMs per host which includes both Primary and Secondary VMs. A max of 16 vmdks per VM is allowed.

- NIC pass-through (VMDirectPath) is not supported for a VM protected by FT
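To make the checklist above a bit more concrete, here is a minimal Python sketch of a pre-flight check for a single VM. The `VM` class, its field names, and the `ft_blockers` function are all hypothetical; they only model the restrictions listed in this post and are not part of any VMware API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class VM:
    """Hypothetical description of a VM; field names are illustrative only."""
    name: str
    vcpus: int = 1
    thick_disks: bool = True   # all vmdks thick provisioned
    snapshots: int = 0
    physical_rdms: int = 0     # virtual-mode RDMs are not counted here
    vmdks: int = 1


def ft_blockers(vm: VM) -> List[str]:
    """Return the reasons (from the list in this post) why FT cannot be enabled."""
    blockers = []
    if vm.vcpus != 1:
        blockers.append("more than one vCPU")
    if not vm.thick_disks:
        blockers.append("thin-provisioned vmdk")
    if vm.snapshots > 0:
        blockers.append("has snapshots")
    if vm.physical_rdms > 0:
        blockers.append("has physical RDMs")
    if vm.vmdks > 16:
        blockers.append("more than 16 vmdks")
    return blockers


print(ft_blockers(VM("dc01")))                        # []
print(ft_blockers(VM("db01", vcpus=2, snapshots=1)))  # ['more than one vCPU', 'has snapshots']
```

An empty list means none of the modelled restrictions apply; host-side requirements such as certificate checking and FT Logging networking still have to be verified separately.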

In conclusion, while FT is a very cool feature of vSphere, it imposes restrictions that are hard to meet, since many IT shops would prefer to use features that are not compatible with FT alongside it. The fact that it doesn’t work across datacenters or clusters is also unhelpful, since many companies these days would like to use it for their DR solutions.

VMware HA

HA is the ability of VMware to power on VMs on another ESX host in case one or more hosts fail in a cluster. Some of the things that you need to know about VMware HA are:

- HA Calculations: HA is based on what is called a “slot”. A slot is basically a reserved space for running a VM in the cluster. Two values determine a slot: the CPU and RAM reserved for a VM. By default VMware HA sets the slot size to the largest CPU reservation and the largest RAM reservation among the powered-on VMs, as shown in the diagram below. HA uses this to determine the number of slots available in the cluster to run the VMs in an HA event. The default selection can be changed by modifying the following advanced attributes:

das.slotMemInMB – Change the memory usage by a VM for slot calculations

das.slotCpuInMHz – Change CPU usage by a VM for slot calculations

- First 5 hosts in a cluster are designated as Primary for HA. At least 1 node in the cluster is selected as Active Primary.

- HA agent is installed on the ESX host and polls for heartbeat every second by default.

Failure is detected if no heartbeat is received in 15 seconds

Isolation Network is polled every 12 seconds after which host is designated as Isolated. Service Console default gateway for ESX and VMkernel default gateway for ESXi are pinged for detecting isolation. You can also designate other addresses by modifying das.isolationaddress to include other destinations in the detection process.

- Admission Control Policy: Ability to reserve resources for HA. You can use Host, VM Restart Priority to control admission policy. VM Restart priorities are defined as

High – First to start. Use for database servers that will provide data for applications.

Medium – Start second. Application servers that consume data in the database and provide results on web pages.

Low – Start last. Web servers that receive user requests, pass queries to application servers, and return results to users.

Disable admission control: If you select this option, virtual machines can, for example, be powered on even if that causes insufficient failover capacity. When this is done, no warnings are presented, and the cluster does not turn red. If a cluster has insufficient failover capacity, VMware HA can still perform failovers and it uses the VM Restart Priority setting to determine which virtual machines to power on first.

- Enable PortFast (Cisco) on the switches as a best practice for bypassing spanning tree delays.

- VM Monitoring – HA also has the ability to monitor VMs but requires that VMware Tools be installed and running. Occasionally, virtual machines that are still functioning properly stop sending heartbeats. To avoid unnecessarily resetting such virtual machines, the VM Monitoring service also monitors a virtual machine's I/O activity. If no heartbeats are received within the failure interval, the I/O stats interval (a cluster-level attribute) is checked. The I/O stats interval determines if any disk or network activity has occurred for the virtual machine during the previous two minutes (120 seconds). If not, the virtual machine is reset. This default value (120 seconds) can be changed using the advanced attribute das.iostatsInterval.
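The slot calculation described under HA Calculations is easier to follow with numbers. The Python sketch below assumes each VM is represented by a hypothetical `(cpu_mhz, mem_mb)` pair of reservations, and it models das.slotCpuInMHz and das.slotMemInMB as optional upper bounds on the slot size. The function names, the sample values, and that interpretation of the two attributes are assumptions made for illustration.

```python
def slot_size(vms, cpu_cap_mhz=None, mem_cap_mb=None):
    """Slot = largest CPU figure and largest memory figure across all VMs.

    vms is a list of (cpu_mhz, mem_mb) pairs. The optional caps stand in
    for das.slotCpuInMHz / das.slotMemInMB (an assumption of this sketch).
    """
    cpu = max(c for c, _ in vms)
    mem = max(m for _, m in vms)
    if cpu_cap_mhz is not None:
        cpu = min(cpu, cpu_cap_mhz)
    if mem_cap_mb is not None:
        mem = min(mem, mem_cap_mb)
    return cpu, mem


def slots_per_host(host_cpu_mhz, host_mem_mb, slot):
    """A host can hold as many slots as its scarcer resource allows."""
    cpu, mem = slot
    if cpu <= 0 or mem <= 0:
        raise ValueError("slot dimensions must be positive")
    return min(host_cpu_mhz // cpu, host_mem_mb // mem)


# Hypothetical cluster: three VMs and a 12 GHz / 32 GB host.
vms = [(500, 1024), (2000, 2048), (1000, 4096)]
default_slot = slot_size(vms)
print(default_slot, slots_per_host(12000, 32768, default_slot))  # (2000, 4096) 6

capped_slot = slot_size(vms, cpu_cap_mhz=1000, mem_cap_mb=2048)
print(capped_slot, slots_per_host(12000, 32768, capped_slot))    # (1000, 2048) 12
```

Note how one VM with a large CPU figure and another with a large memory figure combine into a slot that no single VM actually needs, which is why capping the slot size doubles the slot count in this example.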

VMware DRS – Distributed Resource Scheduler:

- DRS rules can be configured to allow VMs belonging to same environment to run on the same host for better performance. For example, web server talking to a database server for the same app can be on the same host.

- Using DRS, cluster can be configured for load-balancing or failover only.

VMware DPM – Distributed Power Management:

DPM works with DRS to manage power in the cluster. It enables powering off underutilized hosts by moving VMs to other hosts in the cluster and placing the freed hosts in standby mode.

Resource Pools & Shares:

- Resource Pools are hierarchical.

- Resource Pools are both providers and consumers of resources. Child consumes resources from Parent pools.

- Reservation: Tells ESX to power on a virtual machine only if there are enough unreserved resources. As a best practice, leave at least 10% resources unreserved.

- Shares set the relative priority of VMs when they compete for the same resources. Unlike a Reservation, they do not guarantee a fixed amount.

- Shares are assigned values as High (4), Normal (2) and Low (1). An example of how to use these values is shown below:

- Limit:

Upper bound usage for CPU and RAM

Defaults to unlimited CPU (host’s maximum) and unlimited RAM (host’s maximum)

Specify the limit only if you have good reasons for doing so

You can use the following advanced attributes to change the lower bound for CPU and RAM

das.vmMemoryMinMB

das.vmCpuMinMHz
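As a worked example of the High (4), Normal (2) and Low (1) share values, the Python sketch below divides a contended resource among VMs in proportion to their shares. The function name, the VM names, and the 7000 MHz capacity are made up for illustration, and the sketch ignores reservations and limits.

```python
# Relative weights from the post: High (4), Normal (2), Low (1).
SHARE_WEIGHT = {"high": 4, "normal": 2, "low": 1}


def divide_by_shares(capacity, levels):
    """Split capacity among VMs in proportion to their share level.

    levels maps a VM name to "high", "normal" or "low". This only models
    the case where every VM wants more than it can get (full contention).
    """
    total = sum(SHARE_WEIGHT[level] for level in levels.values())
    return {
        name: capacity * SHARE_WEIGHT[level] / total
        for name, level in levels.items()
    }


# Three hypothetical VMs competing for 7000 MHz of CPU.
print(divide_by_shares(7000, {"db": "high", "app": "normal", "web": "low"}))
# {'db': 4000.0, 'app': 2000.0, 'web': 1000.0}
```

Shares only matter under contention: if the host has spare capacity, each VM simply gets what it asks for, up to its Limit.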

Storage VMotion:

- Storage VMotion is the ability of vSphere to migrate VM’s storage from one datastore to another in the same cluster. During Storage VMotion a virtual machine does not change execution host.

- Up to 2 migrations / host are allowed at a time.

VMware Data Recovery: Virtual Appliance based backup solution from VMware.

- Disk-based backup with de-dupe

- Useful for small and medium businesses (SMBs)

- Data Recovery does not support using more than two backup destinations simultaneously

- De-duplicated store creates a virtual full backup based on the last backup image and applies the changes to it

- Data recovery backup appliance can protect a total of 100 virtual machines

- iSCSI/FC SAN storage is recommended, with an absolute minimum of 10 GB of free space; having at least 50 GB is highly recommended for typical usage.

- Port 22024 must be open on ESX hosts.

NOTE: Data Recovery optimizations do not apply to virtual machines created with VMware products prior to vSphere 4.0. For example, change tokens are not used with virtual machines created with Virtual Infrastructure 3.5 or earlier. As a result, virtual machines created with earlier VMware versions take longer to back up.

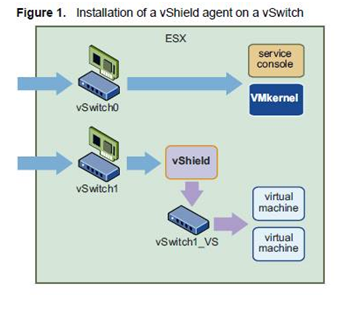

vShield Zones: An application layer firewall to protect VMs. Deployment is based on a manager VM and agent VMs that sit between the VMs and the vSwitch, as shown in the diagram.

Some of the things you should know about vShield Zones:

- You will need to download the vShield Manager OVF (Open Virtualization Format) template and one vShield agent OVF template.

- VMware Wall (firewall) does application-aware traffic analysis and stateful firewall protection by inspecting network traffic and determining access based on a set of rules between unprotected and protected zones.

- You can install a vShield agent on any vSwitch that has a physical NIC attached.

- 3 Port Groups are created namely, VSprot_vShield-name, VSmgmt_vShield-name, and VSunprot_vShield-name.

- 40,000 concurrent sessions can be processed.

- vShield Zones cannot protect the Service Console or VMkernel components

- Works with Cisco Nexus 1000V Series

Fibre Channel over Ethernet (FCoE): FCoE is the ability to make Fibre Channel storage available over Ethernet fabrics by encapsulating Fibre Channel frames in Ethernet frames. vSphere has added this functionality; however, before you can use this feature you will need to add a CNA (converged network adapter) to your server. http://www.internetworkexpert.org/2009/01/01/nexus-1000v-with-fcoe-cna-and-vmware-esx-40-deployment-diagram/ has some very detailed information about using FCoE. Here is a diagram that is a good overview of how FCoE can be set up.

Virtual Distributed Switches (vDS): The vDS is a new feature of VMware available only in vSphere (ESX 4) which allows the creation of virtual switches that span multiple hosts in a cluster or across clusters in the same datacenter. This is a pretty cool feature since it frees the ESX administrator from creating the same vSwitch multiple times on different hosts in a cluster. However, this is just one of the many new features that the virtual distributed switch provides. Some of the other features are:

- vDS has separate control plane that is managed by vCenter

- vDS has separate data plane that is managed by ESX or vSphere kernel

- Ability to perform Network VMotion

- Configuration is possible individually or at the host level

- Virtual switches are abstracted into a single large vNetwork Distributed Switch that spans multiple hosts at the Datacenter level. Port Groups become Distributed Virtual Port Groups (DV Port Groups) that span multiple hosts and ensure configuration consistency for VMs and virtual ports necessary for such functions as VMotion.

- A dvUplink plays the same role as an uplink on a standard vSwitch. The NIC teaming, load balancing, and failover policies that you configure on the vDS and DV Port Groups are applied to the dvUplinks and not to the vmnics on individual hosts

- Private VLANs: Private VLAN ports can be configured as Promiscuous, Community, or Isolated. Communication between these private VLANs is shown in the following figure.
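The communication rules between the three private VLAN port types can also be written down as a small function. In this Python sketch each port is a hypothetical `(port_type, community_id)` tuple; the representation and the function name are illustrative only, while the rules themselves are the standard private VLAN behaviour.

```python
def can_communicate(port_a, port_b):
    """Return True if two private VLAN ports may talk to each other.

    Each port is a (port_type, community_id) tuple, where port_type is
    "promiscuous", "community" or "isolated". community_id is only
    meaningful for community ports.
    """
    type_a, community_a = port_a
    type_b, community_b = port_b

    # A promiscuous port can talk to every other port.
    if "promiscuous" in (type_a, type_b):
        return True
    # Community ports talk only within their own community.
    if type_a == "community" and type_b == "community":
        return community_a == community_b
    # Isolated ports reach nothing except promiscuous ports.
    return False


router = ("promiscuous", None)
web1, web2 = ("community", 10), ("community", 10)
db1 = ("community", 20)
guest1, guest2 = ("isolated", None), ("isolated", None)

print(can_communicate(web1, web2))      # True
print(can_communicate(web1, db1))       # False
print(can_communicate(guest1, guest2))  # False
print(can_communicate(guest1, router))  # True
```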

vApp: A vApp is a collection of VMs that run as a group. It is very useful if you want to run a system as one unit where application, database and web servers need to start and stop simultaneously.

vCenter Linked Mode: Allows connecting multiple vCenter servers together and managing all of them from a single vCenter server. It is not the same as clustering vCenter servers.

VMSafe: A set of APIs and SDKs provided by VMware to allow third-party vendors to build security products around the vSphere architecture for better performance and easy integration with VMs.

VM Hot Add Support & Hot Extend for Virtual Disks (Dynamic Disks Only): Ability to add/increase resources such as vCPUs, memory, and disk space while the VM is running. However, this greatly depends on the OS that the VM is running, as certain OS kernels don’t acknowledge the addition of resources without a restart. Here is a nice chart that I came across while reading a blog from David Davis which is very helpful in determining whether a reboot is required or not. I am sorry I don’t have the link for the blog or the name of the person who provided these results but many thanks to them for this useful information.

Also remember that before you can use these features you need to upgrade the VM's virtual hardware to version 7 and enable Hot Add support for CPU and memory.

VMDirectPath & NPIV: Although not used by many because of the many restrictions these features impose, vSphere now allows VMs direct access to PCI hardware devices via VMDirectPath. We can now also map LUNs directly to a VM using N-Port ID Virtualization (NPIV), which assigns World Wide Names (WWNs) to VMs. This is very useful when a highly utilized VM needs to access storage directly for faster I/O.

PowerCLI: Finally, the most important feature of all is the new command line tool based on PowerShell, which allows administrators to perform many repetitive and tedious tasks using PowerShell-based cmdlets.
